On her AI Weirdness site, and in her book You Look Like a Thing And I Love You, Janelle Shane has been sharing her experiments with various machine learning models, pointing out the most humorous failures, and then explaining how applying this technology without being mindful of its potential and proven pitfalls can do serious harm to society.

Her posts have been less common lately, because the technology (and its ubiquitous mis-application) has advanced to the point where it’s no longer funny. Which depressingly sums up how I feel about it at this point.

This was prompted by an online conversation about AI, but it’s not just a response to that, because it’s something that I’ve been thinking about a lot lately. This is just a lament over the spectacular failure of something that could have been genuinely ground-breaking and useful technology.

Stack Overflow minus assholes

I’m most interested in its applications to computer vision. As someone who started programming on a Commodore 64, it’s still almost preposterously magical that a freely-available system can do accurate hand tracking (and considerably less accurate but still impressive full-body tracking) from a photo or single camera feed. And that’s a somewhat simple case that’s a few years old; other models have already gone beyond that. It’s equally magical that you can run object recognition models on a computer as small as a Raspberry Pi.

It also could’ve been extraordinarily useful to computer programmers. Yes, programming is an “art” as much as a science, but the bulk of the tasks that most programmers are going to be doing day-to-day is implementing new solutions to already-solved problems, and fitting existing libraries or methodologies together to solve a new problem. What if you had a tool that could take a natural-language explanation of the problem that you’re trying to solve, and respond with an (often) functional piece of example code?

Or in other words: what if Stack Overflow was actually useful, instead of being overrun with poorly-socialized assholes who refuse to answer your question and instead either call you stupid, or tell you to RTFM even though you clearly already have?

But even a clear-cut example like that, an application that should’ve been a no-brainer, is so fraught with grossness that it can’t overcome the LLM stink. No, it’s not asking computers to be creative, or even to reason at any meaningful level, but it’s hollowing out and devaluing institutional knowledge.

One of the most common defenses of “AI” is that it’s “democratizing,” but it’s anything but: it treats entry-level positions as easily-interchangeable generators of easily-interchangeable boilerplate code for already-solved problems. It’s short-sighted, because it doesn’t treat the entry-level positions as longer-term investments. It doesn’t appreciate that being able to understand and deduce the solution is what turns entry-level programmers into the type of seasoned veterans who can be assholes on Stack Overflow.

Improving without limit

It should be obvious that applying generative AI to creative roles is even worse. Should be obvious, but since when has that ever stopped a rich person from getting dollar signs in his eyes, or the countless sycophants online who will fall over themselves for the chance to publicly defend him?

People like to point to the nauseatingly horrific images generated by early versions of Google’s DeepDream, and compare them to the nauseatingly uninspired, same-looking, inartistically lit images generated by current models, and insist that this is a technology that is improving without limit. Which is like suggesting that if Usain Bolt had just kept on training, he would’ve eventually achieved flight.

You can understand why that idea is appealing to people whose accumulation of wealth depends on the notion of lines going ever up without limit. People who have millions or billions of dollars, but still believe they don’t have enough, are not the people you want in charge of making sane and reasonable assessments of the capabilities and applications of technology.

Instead, they point to the examples of genuine advancement while completely dismissing or outright ignoring the most obvious fundamental problems. They insist that the people online are dim-witted luddites for pointing and laughing at how generative AI can’t replace artists because it can’t even get the number of fingers right. Then they say that everyone who questioned them was a fool because look, this model can do five fingers, and also we’ve eliminated or outsourced all of our illustrators.

It’s like opening a new Jurassic Park and saying that we can ignore all of the criticisms of the previous ones, because you see, these dinosaurs have feathers.

Failing upwards

The whole limitless potential/line goes up hype draws obvious comparisons to cryptocurrency, especially since we’ve got the same arrogant dipshits (often, the exact same dipshits) saying that anybody who’s critical of the hype is just too non-technical or just plain dim-witted to understand it. At least cryptocurrency was immediately recognizable as a pyramid scheme, though. And whatever genuine applications of blockchain technology might exist are so narrow that it resisted attempts to shove it where it doesn’t belong.

Something remarkable about the current “AI” hype is that it runs so deep, it uses criticism as part of the hype. The people publicly talking about these systems will call errors “hallucinations,” or make other similar attempts to anthropomorphize what is essentially an extremely complex predictive text generator.

The page for OpenAI’s “Sora” project to generate video clips has a section with failed videos, including a particularly unsettling one with a woman failing to blow out candles on a cake, with people in the background trying and failing to clap or control their hands correctly. The description OpenAI includes describes it as a “humorous generation” instead of a horrific freakshow. In another, it says that “Sora fails to model the chair as a solid object, leading to inaccurate physical interactions.”

This strikes me as particularly sinister, because it disguises itself as transparency and objective criticism, when it’s actually just more hype. It’s always carefully worded to suggest two things: 1) that these are just bugs that can and will be worked out of systems that are continuously improving without limit, and 2) that these systems are actually thinking. If you call a “failure” a “hallucination” enough times, you eventually get people to think of these things not as ML models but as brains.

User error

So on top of the obvious complaints about unethical training data, egregiously inappropriate application of the technology, destroying jobs that executives see as replaceable, degrading search engines and customer service experiences, and the numerous well-documented environmental concerns, it’s all just so disappointing. The applications that could’ve really benefited from this technology have been irreparably tainted by overhype and misuse.

You’ll often see people online pointing and laughing at generative “AI” failing to correctly answer the most basic of questions, for good reason. The most recent one I’ve been seeing is easily reproducible examples of ChatGPT being unable to count how many times the letter b appears in the word “blueberry.”

“AI” apologists are quick to claim that this is irrelevant, because that’s simply not what these systems were designed to do. But this attempt at a defense just shows how far things have gone off the rails. If you have a software system that gives unpredictably bad results, that’s a broken system. It’s ludicrous to the point of offensive to suggest that known failures of the software are actually just user error. “You’re doing it wrong.”

Also absurd: “Look at all the things it can do, and you’re complaining about this?” It shows a fundamental lack of understanding of what tools are for. A tool has to be trustworthy before you can insist that people everywhere use it. Apple found out after they abandoned Google Maps for their own solution that it’s not enough to be correct only most of the time. Many people found the experience so unreliable that they never went back, and they still don’t trust Apple’s version. (For what it’s worth: I prefer Apple Maps now, and I use it a lot.)

And crucially: it’s recklessly arrogant to present “AI” as a replacement for technology that it doesn’t actually build on or improve on. It’s overkill to ask Wolfram|Alpha to do basic arithmetic, but at least it can do it. Tech giants have replaced previously functional tools with unreliable “AI” systems and responded to obvious errors with just a shrug and a “shit happens, yo.”

I’m grossly simplifying complex and varied systems into what is essentially pattern-matching, mostly because I only have a layman’s understanding of how they work. But it does strike me as particularly offensive that a multi-billion dollar effort doesn’t even bother to fall back to simpler tools for tasks that could easily be handled by grep. It says a lot about the people behind this “AI” push that they have a system that will generate pages of confident bullshit instead of simply acknowledging “I don’t know.”

Obviously it’s a huge waste of resources to ask a complex ML system to do something as simple as counting the number of letters in a word, but that’s not just some random “gotcha” that online luddites have come up with. It’s a huge part of the problem. Using tons of resources to inject “AI” generated text into every Google request is wasteful. Using tons of resources to generate seconds of video that could’ve been made by skilled 3D artists is wasteful. It’s most often just an extremely expensive and resource-intensive solution looking for a problem that’s already been solved.
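For a sense of scale, the deterministic version of the “blueberry” task fits in a few lines of Python. This is only a minimal sketch: the function name `count_letter` is hypothetical, and it is not taken from any of the tools mentioned here.

```python
def count_letter(word: str, letter: str) -> int:
    """Count case-insensitive occurrences of a single letter in a word."""
    if len(letter) != 1:
        raise ValueError("letter must be a single character")
    # str.count does a plain left-to-right scan; no model, no guessing.
    return word.lower().count(letter.lower())


if __name__ == "__main__":
    print(count_letter("blueberry", "b"))  # prints 2
```

The same input always gives the same answer, which is the kind of predictability the paragraphs above are asking for.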

Why can’t we have nice things

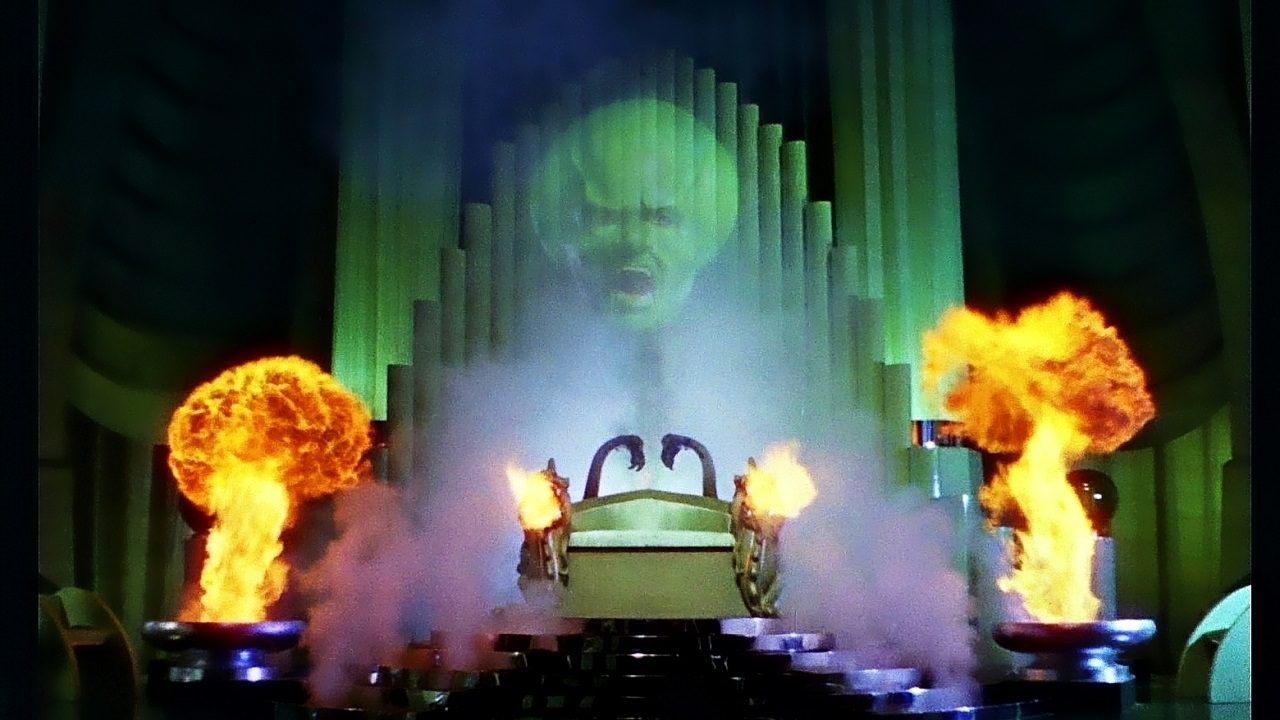

While looking for an image for this post, I just entered “why can’t we have nice things” into Google, and pictured here is a small portion of the page-long response the “AI” generated for me.

It gives an unnecessarily long explanation of the idiom, and then includes a link to a Taylor Swift video that I didn’t ask for. Then there are multiple paragraphs with bulleted lists claiming to discuss the expression and its implications, along with another link to the same song, for some reason. At the end is a very small disclaimer saying “AI responses may include mistakes.”

This isn’t going to get passed around as a meme, because it’s not incorrect enough to be interesting. Technically, it’s not incorrect at all. It’s just all completely, depressingly, unnecessary.

It’s all delivered in the style of a BuzzFeed post, or the text at the beginning of an online recipe: it’s probably all grammatically correct, and it’s not egregiously counter-factual or anything, but it simply doesn’t say anything. I can’t imagine how any of it would be useful to anyone. At least, anyone who wasn’t trying to generate filler text to pad out a word count. It is no more and no less than “content.” The best you can say for it is that it takes up space.

I used to be optimistic about how all of this stuff was going to play out. The novelty would wear off, the bubble would burst, and people would eventually realize they’d been taken in by smoke and mirrors. Maybe we’d end up with sane and reasonable applications for machine learning that could be trained ethically and run efficiently.

Now, though, it depressingly seems like the reason people find generative “AI” so appealing is that they’ve devalued not just artists, writers, and programmers, but original thought itself. The people yearn for filler.

Comments

2 responses to “The Tragedy of the Slop”

“The applications that could’ve really benefited from this technology have been irreparably tainted by overhype and misuse.”

Gosh, yes, this. I’m not, in principle, against the use of AI creatively. But that’s the thing: it has to be used, by a human, in a way which offers new creative options – not simply to replicate what humans can do, and especially not entry-level humans.

Yeah, the frequent refrain from AI apologists is familiar from the cryptocurrency bubble: “the future is already here, man! You have to either catch up or be left behind!” They’re using it to defend pushing LLMs and ML in general into places they don’t belong, but my only somewhat optimistic takeaway is that they’re right to some degree: the underlying technology is already here, and it’s available even on small platforms with models that you can train yourself. Even after the bubble bursts, we’re not going to end up in some Butlerian jihad situation where we have computers that can’t even do natural language processing anymore.

The concerning thing is that the gold rush/hype bubble exists because the training data sets are so huge, and the resources required are so expensive, that only the largest companies can afford it. Which, obviously, is the opposite of technology that could’ve been democratizing.